Artificial Intelligence and its potential impact on all parts of society and the businesses of tomorrow is a hotly debated topic these days. But what is the role of AI and Large Language Models in the world of translation, and, more specifically, translation in the Nordic languages? Read on to learn more about the possibilities and challenges of using AI for translation.Â

ChatGPT and the potential of AIÂ

We have all heard about ChatGPT (short for Chat Generative Pre-Trained Transformer) and how it can produce human-like responses to a multitude of questions and queries. The output can even include human-level factual errors, amusingly known as “hallucinations”. If you have tried it yourself, you may have noticed this phenomenon, which occurs for a number of reasons, not least lack of knowledge of events after September 2021, the fact that it doesn’t learn from experience and it can accept obviously false statements from users as true. Â

Still, the emergence of this engine in November 2022 has certainly changed public perception of the potential of AI and natural language processing (NLP), including fears that they will be used to write exams and essays. These concerns will only worsen with the emergence of more extensively trained models.Â

The Large Language ModelsÂ

When using ChatGPT or GPT-4, the public is interacting with a Large Language Model (LLM), which is just one of several types of language models:Â

Zero-shot model: A large, generalised model trained on a generic corpus of data and able to give a reasonably accurate result for general use cases, without the need for additional training. This is what GPT-4 is regarded to be.Â

Fine-tuned or domain-specific model: A zero-shot model with additional training produces a fine-tuned, domain-specific model. An example of this is the OpenAI Codex, a domain-specific LLM for programming based on GPT-4.Â

Language representation model: This model makes use of deep learning and transformers well suited for NLP. An example is Bidirectional Encoder Representations from Transformers (BERT). Â

Multimodal model: While many LLMs are trained just for text, the multimodal model can handle both text and images. An example of this is GPT-4.Â

As the computational social scientist, Bernard Koch, said in a recent interview: “One reason these large language models remain so remarkable is that a single model can be used for tasks including question answering, document summarization, text generation, sentence completion, translation and more.”Â

It is this generalist potential that is firing up the imagination of professionals in the AI industry and beyond. But how does it work, and can it really be used with confidence to produce human-quality translations?Â

Parameters and machine learningÂ

The way a Large Language Model (LLM) works is that rather than training it to do a particular task, it has a general framework with billions of parameters. Â

The first LLM, BERT, came out in 2018 with 100 million parameters, and then GPT-2 with over a billion parameters. From there, the growth has been exponential, with GPT-4 reaching a trillion parameters.Â

What exactly are parameters in Large Language Models? There are two types: hyperparameters and model parameters. The former can be compared to rules or instructions set by the user before the model starts the “learning” process. Model parameters are then what the model “learns” from the training data fed to it. How well it functions depends on the algorithms for integrating this data and developing these parameters. Â

As one technology blog explains, “Parameters fundamentally equip a model with prediction capabilities.”Â

For non-technical readers, these parameters are like buttons and knobs that can be pushed and turned to fine-tune how the system responds to the data it is fed and how well it completes the desired tasks. Hyperparameters are the buttons and knobs put in place by the designer whereas model-parameters are the buttons and knobs that emerge as the model is trained on data.Â

The more parameters, the more fine-tuned the model will be in terms of producing the appropriate output when prompted.Â

Language Models and translationÂ

Apart from plaguing teachers across the world with AI-generated essays, what can LLMs be used for in other industries? One area in which they may play a revolutionary role is in the field of translation. Â

Translation is all about text, so an AI engine built specifically for the production of general text should be perfect for translation-related work. Indeed, the general LLMs have shown themselves to have “outstanding potential”, according to a study from May 2023. Â

Feed me, Seymore!Â

For LLMs to develop billions or trillions of parameters, they need a large amount of data from which to generate these rules. For this reason, the larger the corpus (a large and structured set of texts) of a language, the better the quality of the machine translation. Â

This means that the system can recognise the difference between words in different contexts, such as proper nouns (names) that are the same as common nouns.Â

In this example, the German noun “MĂĽller” can be a miller, but it can also be the surname MĂĽller.Â

Ein MĂĽller namens Markus MĂĽller hat fĂĽnf Jahre Erfahrung. Sein Bruder Karl MĂĽller ist ebenfalls MĂĽller. Die ganze MĂĽller-Familie sind MĂĽller. Der Vater, Hans, ist MĂĽller, die Mutter Helga ist MĂĽllerin, der ältere Bruder Otto ist MĂĽller. Alle MĂĽllers sind MĂĽller.Â

This is what ChatGPT produced when asked to translate it into English:Â Â

One miller named Markus MĂĽller has five years of experience. His brother Karl MĂĽller is also a miller. The entire MĂĽller family are millers. The father, Hans, is a miller, the mother Helga is a miller, the older brother Otto is a miller. All the MĂĽllers are millers.Â

Although the MĂĽller family may not be particularly inventive in their career choices, the differentiating between noun and proper noun (name) is quite impressive here, particularly because nouns in German are capitalised just like names. Â

German is one of the larger languages, but let’s take Norwegian, of which there are 5 million users, not much more than the number of people who use London Public Transport on a typical day.Â

Here, it’s the profession of baker and the name Baker that we set as the challenge:Â

Tom Baker is a master baker. He is part of the Baker family who are all bakers and work in Bakers & Co, the family firm of the Bakers. The bakers in Bakers are all highly qualified bakers. One baker, John Baker, has been a baker for 23 years. All the Bakers are bakers.Â

ChatGPT:Â

Tom Baker er en mesterbaker. Han er en del av Baker-familien, som alle er bakere og jobber i Bakers & Co, Baker-familiens firma. Bakernes i Bakers er alle svært kvalifiserte bakere. En baker ved navn John Baker har vært baker i 23 ĂĄr. Alle Baker-ene er bakere.Â

Here it struggles a little bit with “bakers in Bakers” and “Bakers are bakers”, which are not rendered correctly. However, the result is still pretty good in terms of distinguishing between proper and common nouns, a recurring problem in machine translation. Â

AI and contextÂ

One reason why a Large Language Model like ChatGPT can produce fairly accurate output, even with tricky texts such as the above, is that they mimic a process carried out by human translators. Â

A professional translator will usually take several initial steps when preparing to translate something, such as researching the overall topic, considering the context, looking at the company in question, as well as previous translations, term lists, etc.Â

A recent study by Chinese researchers examined how this approach can be systematically used to further improve LLM translation. They called it the MAPS framework (Multi-Aspect Prompting and Selection).Â

The process consists of three stages: “(1) Knowledge Mining: the LLM analyzes the source sentence and generates three aspects of knowledge useful for translation: keywords, topics and relevant demonstration. (2) Knowledge Integration: guided by the different types of knowledge separately, the LLM generates multiple translation candidates. (3) Knowledge Selection: the candidate with the highest QE (reference-free quality estimation) score is selected as the final translation.” (My bolding).Â

Perfecting this functionality can improve the usefulness of AI, as this approach reduced “… up to 59% of hallucination mistakes in translation.”Â

What about rare words?Â

As mentioned above, smaller languages yield smaller corpora to train the engine on, and the same is true of infrequently used words in any language. Seldomly used words can end up being mistranslated or not translated at all. In a paper published by Cornell University in February 2023, three researchers came up with a solution to this challenge: DiPMT or Dictionary-based Prompting for Machine Translation.Â

In essence, this approach involves training the engine on dual-language dictionaries in addition to general corpus text, including domain-specific dictionaries, such as financial or medical dictionaries. DiPMT then uses prompts to indicate how a word is used in a particular context.Â

The authors claim that DiPMT improves translation significantly both for low-resource and out-of-domain translation. They go on to discuss how having enough data is crucial for an LLM to function well. This is not often the case with smaller, specialist domains as well as for languages with smaller text corpora, as mentioned previously. Training the model on dictionaries with the addition of human feedback as to the correct term for the context, the DiPMT approach, has proven an efficient remedy for this weakness.Â

What about the Nordics?Â

As the languages of the Nordic region are less widely spoken, they present a unique challenge to these LLMs. Smaller languages lack the large quantities of data that train these models to produce high-quality output. In these languages, is it possible to achieve the same quality or usefulness? Â

AI Sweden has been working to answer just that question over the past few years.Â

Due to the enormous costs and resources needed, AI Sweden joined forces with supporting organisations, including the Research Institutes of Sweden (RISE) and the Wallenberg AI, Autonomous Systems and Software programme, to build a home-grown Swedish model.Â

Dr. Magnus Sahlgren, Head of Research for Natural Language Understanding at AI Sweden, explains that the main bottleneck for the Swedish researchers is data, as Swedish is much less widely spoken than other languages. Â

But the researchers solved this problem by taking advantage of the fact that Swedish is typologically similar to the other languages in the North-Germanic language family. By combining data from Swedish, Norwegian, Danish and Icelandic, as well as English and code, they gained access to far greater amounts of data. They called this The Nordic Pile.Â

A particular advantage of building a new Swedish model based on this group of Nordic languages is that it can later be used as a starting point for one of the other languages, such as Icelandic.Â

AI and data services for natural language processing

Practical limitations with Large Language ModelsÂ

There are several reasons why professional translation companies such as Sandberg cannot easily take advantage of LLMs in their current form. Â

Firstly, we deal with proprietary texts that our clients own, so we cannot feed this into an external AI-bot owned by another company or government agency.Â

We make sure that our clients’ texts are treated carefully, according to the clients’ privacy requirements, following all relevant laws and regulations and abiding by high ethical standards. Â

Secondly, the cost threshold for any business to build its own LLM is also significant, to say the least. Microsoft invested USD 1 billion in 2019 and another 10 billion in 2023 in Open AI’s Chat GPT-3 and ChatGTP venture. Developing GPT-4 is reported to have cost USD 100 million, and the training consumes enormous amounts of electricity, computing power and storage capacity.Â

This is why the Swedish developers had to build a broad coalition of partners, even though they were backed by the Swedish government.Â

Nevertheless, at Sandberg we follow the development of any language-related or translation technology very closely. We have been leaders in using and developing internal high-quality Nordic neural machine translation systems that ensure higher efficiency within a quality-assured and secure process.Â

Megan Hancock, for many years a Translation Project Manager and now Specialist Project Manager, explains what the Sandberg approach to machine translation is:Â

“We’re equipped with over 40 neural machine translation engines which are trained on a carefully curated set of data that enables us to apply it on the full range of domains and text types that we handle in our day-to-day work, from mechanical engineering to marketing or business-oriented content. All Sandberg’s MT engines translating in either direction between the Scandinavian languages and English have an edit distance of less than 20%. We are also fully compliant with the ISO 18587 certification for the post-editing of machine translation output.”

What’s edit distance?

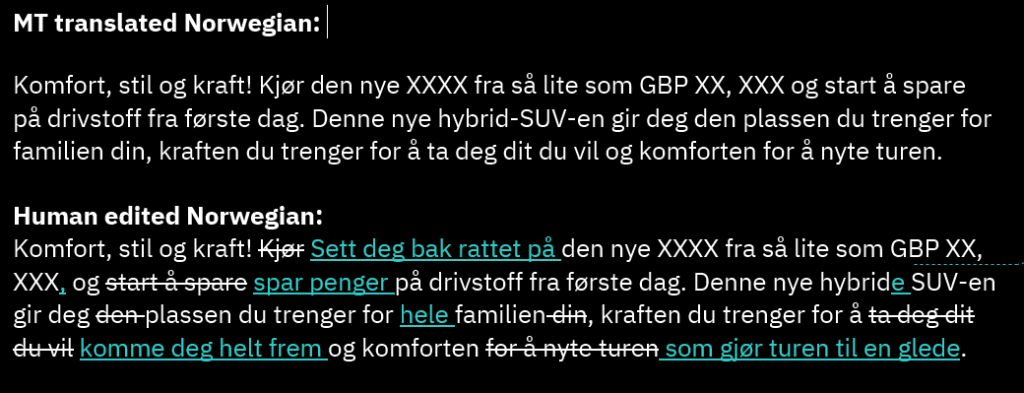

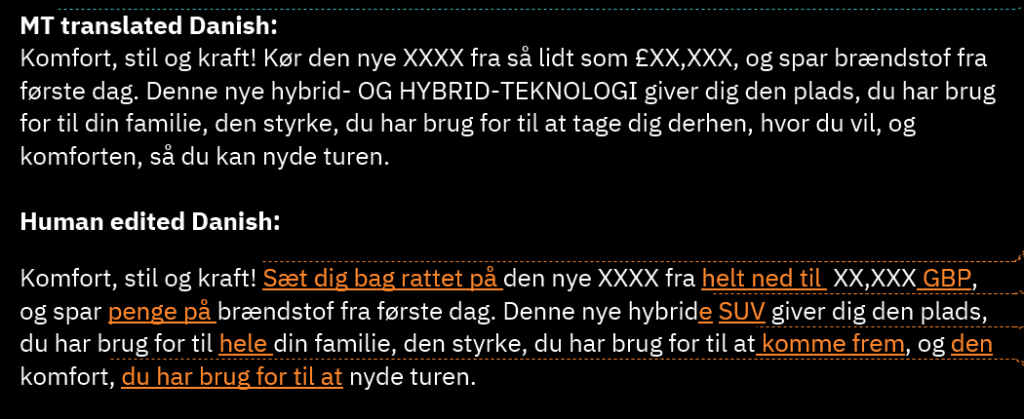

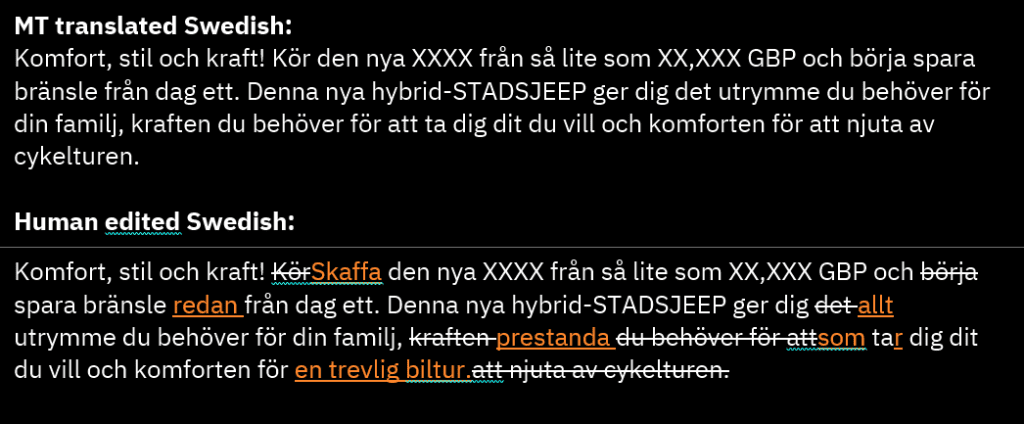

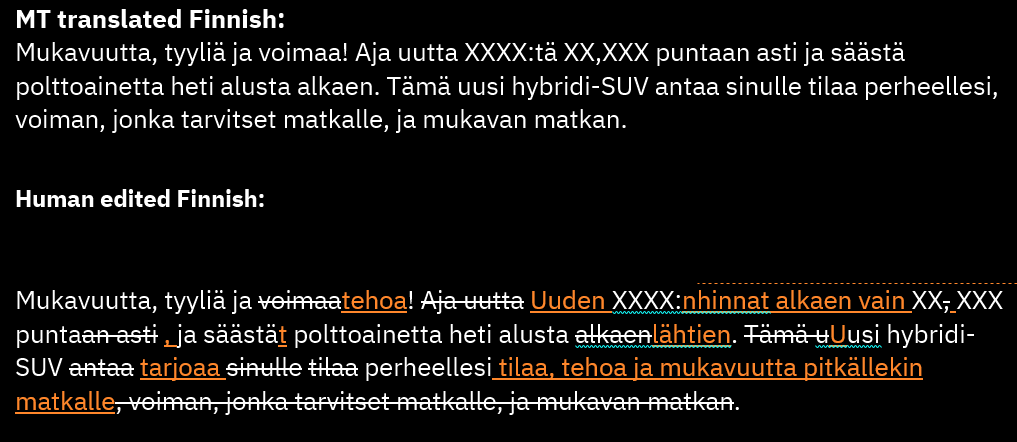

To give a practical example, we have written a source text inspired by the text types that we often translate. Let’s see how our engines deal with it.Â

A typical marketing text may go something like this:Â

Comfort, style and power! Drive the new XXXX from as little as ÂŁXX,XXX and start saving on fuel from day one. This new hybrid SUV will give you the space you need for your family, the power you need to take you where you want to go and the comfort to enjoy the ride. Â

Our engines gave us the following results for these Nordic languages:Â

For those who are familiar with any of these Nordic languages, you will see that the translations are far from perfect. However, they do provide a human translator with a rough translation that they can tweak into a fully polished text, with less effort and time spent than translating it from scratch.

Will AI take over translation?

Will AI take over completely? It doesn’t seem like we are getting anywhere near that point just yet. Most language service providers in the world are already equipped with machine translation engines that provide rough translations that professional linguists then post-edit. While AI output can also be edited by humans in the same way as MT, both kinds of technologies can fall dangerously short when dealing with culturally sensitive content like marketing campaigns.

Still, investing in new AI technologies and further optimising translation workflows will become more frequent as soon as AI generates consistently better outputs than MT across languages and domains. As for the moment, research shows that AI models achieve competitive translation quality only for high-resource languages (where there are vast amounts of data), while having limited capabilities for low-resource languages.

Degeneration danger

Another reason why artificial intelligence is not ready to take over is a phenomenon known as “model collapse”. This is when AI trains on AI-generated content and degenerates as a result, a little like the increasingly bad quality you would get if you took a photocopy of a photocopy of a photocopy, etc.

The first LLMs were all trained on existing written material, almost all of it produced by humans. But as the amount of AI-produced text grows, it becomes part of the data used to train AI engines, reinforcing errors and leading to a deterioration in output quality.

One of the authors of a recent study into this, Ilia Shumailov, said in an interview with VentureBeat: “We were surprised to observe how quickly model collapse happens: Models can rapidly forget most of the original data from which they initially learned.”

This is not all bad – it means that human content creators will still be needed in the future, even if it’s just to produce high-quality content to train AI.

There is no doubt that LLMs will play a significant role in the future of translation. Exactly how we will balance the need for data protection, copyright and confidentiality with the efficiency that LLMs offer remains to be seen. But for now, there is still a clear need for the human creator, editor or translator to play their role in the process.